Why use robots.txt in SEO, and how does it work?

Introduction

Robots.txt is a file you can use to control how search engines crawl your site and which pages their crawlers should stay away from. For example, if your website has a page that is only meant for employees of your company, you can ask search engine crawlers not to access that page using robots.txt. Keep in mind that robots.txt is a request to well-behaved crawlers, not a security measure, so a page that must stay private should also be protected with a login.

What are search engine crawlers?

- Search engine crawlers are software programs that search the internet for new content, links, and other elements. They follow links on your website to find new pages, which they then index in their databases so that users can search them.

- Crawlers are also called spiders or robots. They’re used by search engines like Google to find new content for their search results.
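
To make the "follow links" step concrete, here is a minimal sketch in Python of how a crawler might collect the links on a page. The class name `LinkCollector`, the sample HTML, and the example.com URLs are assumptions made for illustration; real crawlers do much more (fetching, queueing, deduplication).

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the absolute URLs of all <a href="..."> links on a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the URL of the page.
                self.links.append(urljoin(self.base_url, value))


# Hypothetical page content, used only for this example.
PAGE = '<p><a href="/about">About</a> <a href="post-1.html">Post</a></p>'

collector = LinkCollector("https://example.com/blog/")
collector.feed(PAGE)
print(collector.links)
```

Each collected URL would then be a candidate for the crawler to visit next.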

How does robots.txt work?

Robots.txt is a text file that’s placed on your website to control how search engine crawlers (bots) will interact with your site. The robots.txt file contains rules that tell the bots what they can and cannot access on your site.

For example, if you don’t want Google or Bing to crawl specific parts of your site, you can add a Disallow rule to the robots.txt file that blocks those URLs from being crawled by these search engines. If you want part of a blocked section to stay crawlable, you can add an Allow rule for that path. Rules are grouped by user agent, so you can set different rules for specific bots. Note that robots.txt has no way to schedule access by time of day or by month; the rules apply whenever a crawler reads the file.
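
As a rough illustration of rules grouped by user agent, the sketch below uses Python’s standard-library `urllib.robotparser` to evaluate a small robots.txt. The `/members/` path, the example.com URLs, and the bot name `OtherBot` are assumptions for this example, and Python’s parser is a simplified one, so real search engines may evaluate edge cases differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: Googlebot and Bingbot are kept out of /members/,
# while every other crawler may access everything.
ROBOTS_TXT = """\
User-agent: Googlebot
User-agent: Bingbot
Disallow: /members/

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

members_url = "https://example.com/members/profile.html"
pricing_url = "https://example.com/pricing"

googlebot_members = parser.can_fetch("Googlebot", members_url)
bingbot_members = parser.can_fetch("Bingbot", members_url)
otherbot_members = parser.can_fetch("OtherBot", members_url)
googlebot_pricing = parser.can_fetch("Googlebot", pricing_url)

print(googlebot_members, bingbot_members, otherbot_members, googlebot_pricing)
```

An empty `Disallow:` line means nothing is blocked for that group.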

Format of robots.txt

In case you’re not familiar with robots.txt, it’s a plain text file that lists the parts of your website that search engines should not access. It’s located in the root directory of your website and must be named “robots.txt”. A group of rules starts with a User-agent line, for example:

```
User-agent: *
```

This line says which crawlers the rules that follow apply to; the asterisk means all of them. The next line is usually a “Disallow” rule; here you can tell search engines what they shouldn’t crawl (basically, what they shouldn’t access).

For example, if you have a directory on your website that just holds images, you may not want search engines to spend time crawling it. So instead of letting them crawl it and waste their resources, you can add this line:

```
Disallow: /images/
```

This tells search engines not to access any URL whose path starts with /images/.
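
The rule matches by path prefix, which a short Python sketch can show using the standard-library `urllib.robotparser`. The helper name `is_allowed`, the bot name `ExampleBot`, and the example.com paths are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse(["User-agent: *", "Disallow: /images/"])


def is_allowed(path):
    """Return True if a generic crawler may fetch the given path."""
    return parser.can_fetch("ExampleBot", "https://example.com" + path)


# Paths that start with /images/ are blocked.
blocked_logo = is_allowed("/images/logo.png")
# A path that merely contains "images" further along is not blocked.
nested_images = is_allowed("/gallery/images/logo.png")
# A different directory with a similar name is not blocked either.
similar_name = is_allowed("/images-archive/2020.html")

print(blocked_logo, nested_images, similar_name)
```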

What to add in robots.txt?

You can’t use robots.txt to change the content or structure of your site, so don’t try!

You should only use robots.txt to block or allow access to specific pages on your site.

Here is an example of how you might use it:

- You want search engines like Google and Bing to crawl all of your pages except for those under /blog/. You would add the following lines to the robots.txt file at root level:

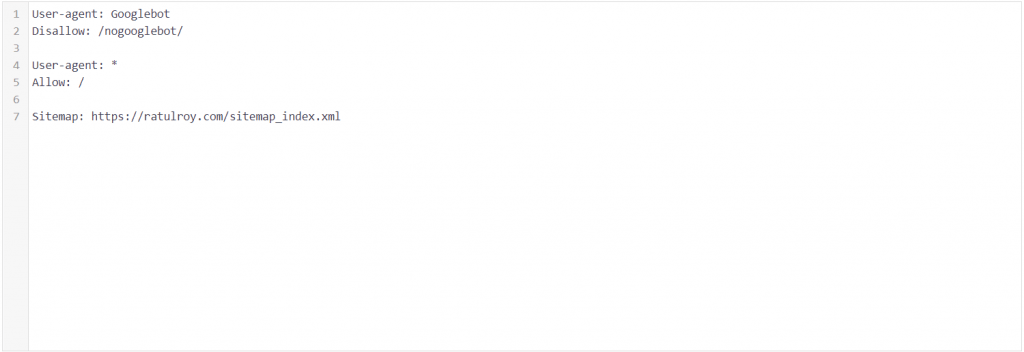

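```
User-agent: *
Disallow: /blog/
```

Crawlers request the file from that location on their own, so you don’t need to submit it. If you want to check how Google reads your robots.txt file, you can use Google Search Console (formerly Google Webmaster Tools). You can find out more here: https://support.google.com/webmasters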
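
If you would rather generate the file than write it by hand, a minimal Python sketch could look like the following. The helper `build_robots_txt` and the example.com URLs are hypothetical names introduced here; the check at the end uses the standard-library `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(disallowed_paths, user_agent="*"):
    """Build robots.txt text with one rule group for the given user agent."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    return "\n".join(lines) + "\n"


robots_txt = build_robots_txt(["/blog/"])
print(robots_txt)

# Sanity check: parse the generated text and test two sample URLs.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
blog_allowed = parser.can_fetch("ExampleBot", "https://example.com/blog/post-1")
about_allowed = parser.can_fetch("ExampleBot", "https://example.com/about")
```

The generated text would then be saved as robots.txt in the root directory of the site.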

Robots.txt Guideline By Google

Why use robots.txt?

In general, robots.txt is a file that allows you to control what search engine crawlers do on your site. It allows you to keep search engines from crawling certain parts of your site. It does not control indexing or caching by itself: a blocked URL can still show up in search results if other pages link to it, so a page that must stay out of search results needs a noindex robots meta tag or password protection instead.

The main benefit is obvious: you can block search engines from crawling parts of your site as needed. This is useful because some sites have sections that only serve an internal purpose, for example blogs or forums where users post content that is meant to be seen only by logged-in users. You don’t want crawlers spending their time on those pages.


The robots.txt file is a text file that you can place in the root directory of your website. It’s used to tell the web crawler what should and what should not be crawled on your site.

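For example, if you do not want Googlebot to crawl a specific page or folder (including everything below it), add a Disallow directive with a path such as /folder/, or a wildcard pattern such as /*page* or /*/folder/. In these patterns, * matches any sequence of characters, and a $ at the end of a pattern marks the end of the URL.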
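
Python’s built-in `urllib.robotparser` does not handle these wildcards, so the sketch below translates a pattern into a regular expression instead. It is a simplified illustration of `*` and `$` matching only, not Google’s actual implementation; the function names and sample paths are assumptions.

```python
import re


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any sequence of characters; a trailing '$' anchors the
    pattern to the end of the URL path. Everything else is literal.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + (r"\Z" if anchored else ""))


def path_matches(pattern, path):
    """Return True if the rule pattern matches from the start of the path."""
    return pattern_to_regex(pattern).match(path) is not None


print(path_matches("/folder/", "/folder/page.html"))
print(path_matches("/*/folder/", "/site/folder/page.html"))
print(path_matches("/*.pdf$", "/docs/report.pdf"))
```

Without a trailing `$`, a pattern behaves as a prefix, so `/folder/` matches every URL below that folder.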

Conclusion

In this article, you have learned about robots.txt in SEO and how it is used to control crawlers’ access to your website. It is important to know that the robots.txt file must be placed at the root level of your site, not inside a subdirectory (like “blog” or “articles”), because crawlers only look for it at the root and will ignore a copy placed anywhere else.
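
To see where a crawler would look for the file, here is a small Python sketch that derives the robots.txt location from any page URL. The function name `robots_txt_url` and the example.com URLs are assumptions for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the root-level robots.txt URL for the site hosting page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, drop the path, query string, and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://example.com/blog/post-1?ref=home"))
print(robots_txt_url("https://shop.example.com/articles/"))
```

Note that each host gets its own file, so a subdomain such as shop.example.com needs a separate robots.txt.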

For any queries, contact Ratul Roy.