Ratul Roy - SEO Expert in Bangladesh

Welcome to the world of SEO magic, where age is just a number and success knows no bounds! Meet Ratul Roy, the Youngest White Hat SEO Expert in Bangladesh, the prodigy who kicked off his journey as a freelance SEO specialist in Bangladesh at the age of 16. Still juggling textbooks and algorithms, Ratul has a story of determination, innovation, and boundless ambition. Are you ready to join him on an interactive journey through the fascinating world of SEO? Let’s dive in!

Ratul Roy - Youngest SEO Expert in Bangladesh

I am Ratul Roy, the youngest SEO expert in Bangladesh and an experienced SEO specialist. Three years ago, I got involved in the online and digital marketing industry and started freelancing on Fiverr. As a freelancer, I worked individually, completed 30+ SEO projects, and became a Level 1 seller on Fiverr (I am still a Level 1 seller). Then I joined Netmark (a Norway-based web development and marketing agency) as a Senior SEO Strategist and team leader. There I had the chance to work with leading local businesses in Norway. Later I built a team of SEO experts and digital marketers; we provide our services worldwide and work with many large companies. Having started my journey as an individual SEO specialist and worked successfully with many clients, I now work with my team to deliver the quality you would expect from a reputed digital marketing agency. I love learning new things and always keep myself up to date. My first priority is to provide the best white hat services to my clients.

Experience

Learning SEO & Internship

I embarked on my journey in the world of SEO by learning the ins and outs of search engine optimization. I gained practical experience through internships, honing my skills and understanding of SEO strategies.

Fiverr

Building upon my initial SEO knowledge, I began offering my services on Fiverr. With dedication and expertise, I attained the prestigious Level 2 seller status, earning recognition for my quality work and customer satisfaction.

SEO Manager at NETMARK

As my expertise grew, I progressed to the role of SEO Manager at NETMARK, a prominent Norway-based agency. In this position, I led and executed successful SEO campaigns for clients, driving organic growth and enhancing online visibility.

Local Projects

Recognizing the power of collaboration, I expanded my reach by assembling a skilled team. Together, we provided comprehensive SEO services, combining our expertise to deliver exceptional results for our clients.

Getting Partnership with DigiPolli

Building upon my success, I formed a valuable partnership with DigiPolli, an esteemed agency. This partnership allowed me to leverage their resources, expand my client base, and further enhance the scope and impact of my SEO services.

Our Simple and Effective SEO Process

Through these simple yet effective steps, our SEO service will elevate your website's visibility, attract targeted traffic, and drive sustainable growth for your business. Let's embark on this journey together and unlock your website's true potential!

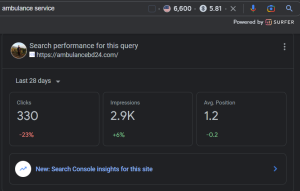

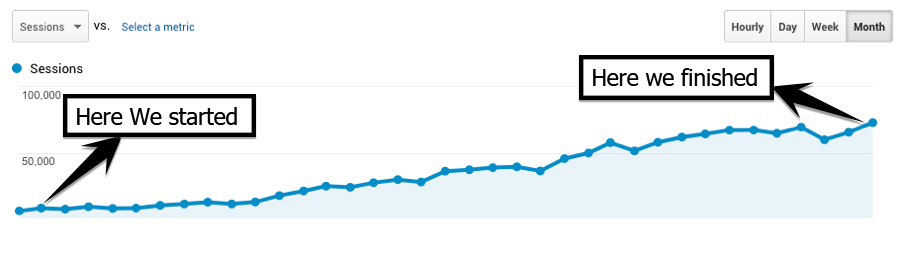

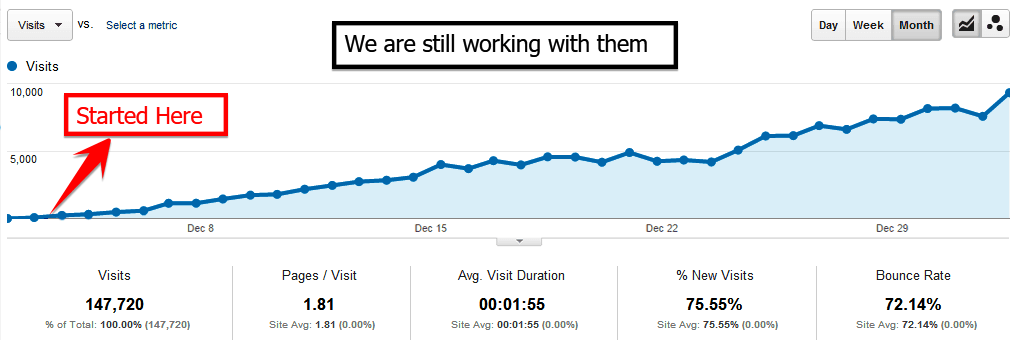

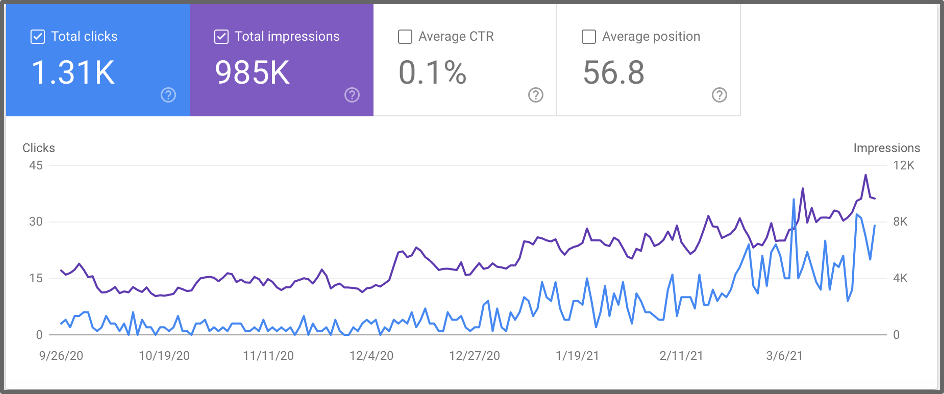

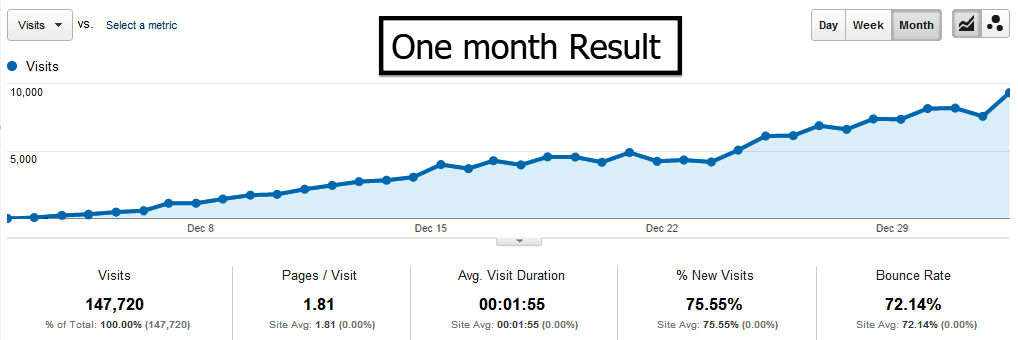

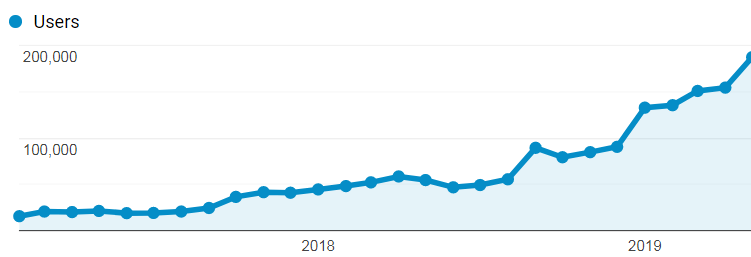

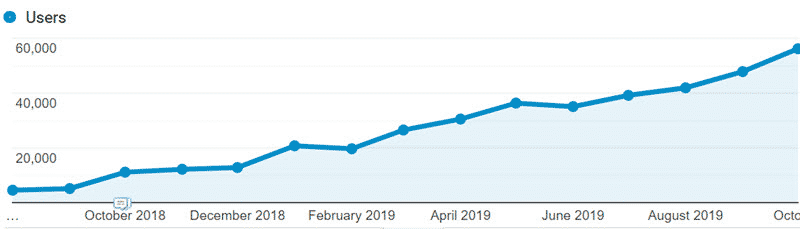

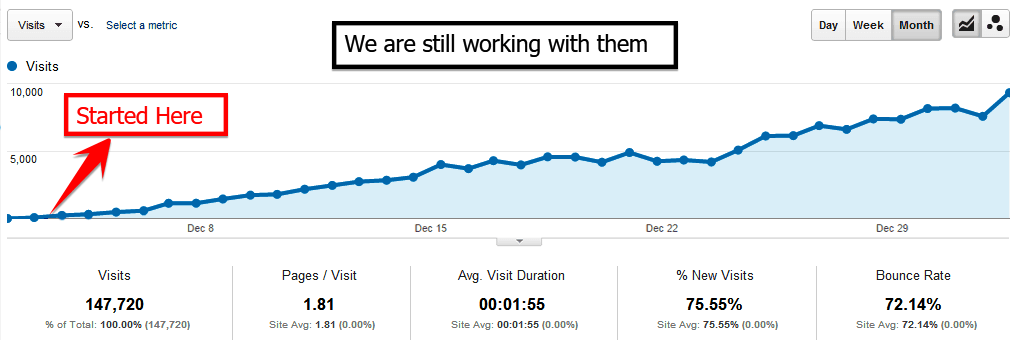

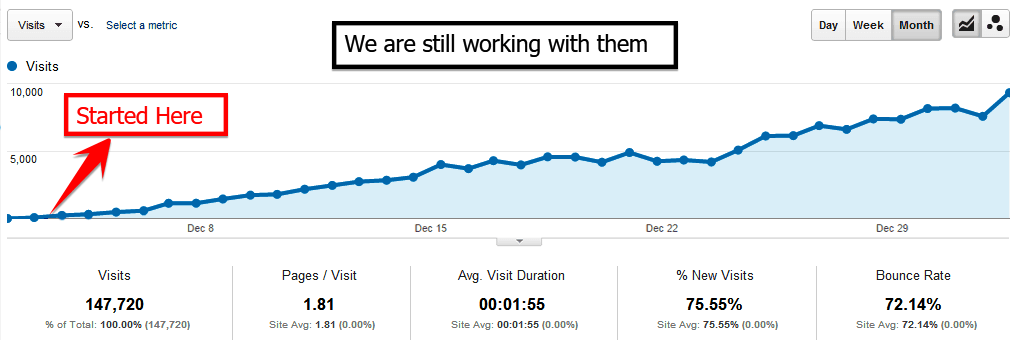

Some of Our Results and Performance

We strive to deliver results to our clients, not just promises, and those results prove our success rate. Our team is made up of highly qualified strategists who make your website robust against Google’s ever-changing algorithms.

We have worked with

Our White Hat SEO Services

We specialize in implementing ethical and white hat SEO techniques to improve your website's visibility and rankings in search engine results. Our team focuses on optimizing your website's on-page elements, content, and backlink profile to attract organic traffic and ensure long-term success. With our white hat SEO strategies, you can build a strong online presence while adhering to search engine guidelines.

Local SEO

Dominate local search results, attract foot traffic, and generate leads with our location-specific SEO strategies.

E-Commerce SEO

Increase online sales and visitors with our specialized, tailored SEO services for e-commerce websites.

Blog SEO

Reach a wider audience, establish thought leadership, and drive valuable traffic to your blog.

Our Exclusive Features

With these unique features, our services stand out from the crowd, delivering exceptional value and driving your online success.

Exclusive Local SEO Service

Are you a business owner looking to dominate your local market? Do you want to attract more customers, increase your online visibility, and stay ahead of your competitors? Look no further! Ratul Roy, your trusted local SEO expert, is here to help you achieve your goals.

With years of experience in the ever-evolving field of search engine optimization, I understand the unique challenges that local businesses face. I have successfully assisted numerous businesses in optimizing their online presence and driving targeted traffic to their websites.

What sets my local SEO services apart is their exclusivity. I believe in providing personalized solutions tailored specifically to your business needs. Unlike generic SEO strategies that may overlook the importance of local search, my approach focuses on maximizing your visibility in local search results.

SEO Case Study

TechicTalk – How We Ranked 100+ Keywords within 1 Month

Our client TechicTalk is a tech blog that provides news...

When SEO is done the right way, with the correct methods, it can work like magic. The team of Ratul Roy knows all the magic spells to help you reach your goals and the widest possible audience. With extensive SEO knowledge and years of experience, we provide 100% organic, white hat SEO services to our clients. We are one of the leading white hat SEO service providers, helping you increase your sales and revenue with SEO. Get higher rankings everywhere with our remarkable services. Our quality content helps your business climb the rankings every single day.

Client's Satisfaction is our Ultimate Goal

We listen to our clients’ questions and give them answers and solutions. For us, your business matters, and our goal is to increase your sales and revenue. Our mission is to provide the best Search Engine Optimization services and be the SEO Guru in the digital world.

We do not rest until the work is done and our clients are satisfied. We offer excellent, reasonably priced packages and quality services. And that is what makes us the best choice.

Happy Clients

What do our clients say about us?

Let's see the live proof.

Why choose us?

Don’t let your website get lost in the vast digital abyss. Choose me as your SEO partner, and together, we will unlock the true potential of your online presence. Get ready to rise above the competition and achieve unrivalled success. Contact me today and let’s embark on an exciting journey towards digital greatness!

With years of industry experience and a deep understanding of search engine algorithms, I possess the expertise needed to optimize your website for maximum visibility. I stay on top of the ever-evolving SEO landscape, ensuring that your business stays one step ahead of the competition.

Every business is unique, and your SEO strategy should reflect that. I take the time to understand your brand, target audience, and business goals, crafting personalized strategies that align perfectly with your vision. Together, we will unleash the true potential of your website.

In the dynamic world of SEO, staying stagnant is not an option. I utilize the latest tools, techniques, and best practices to deliver exceptional results. From on-page optimization to link building and content marketing, I employ a comprehensive approach to boost your website’s search engine rankings.

I believe in achieving long-term success through ethical means. Unlike dubious providers who resort to black hat tactics, I adhere to industry standards and guidelines set by search engines. Your website’s reputation is safe in my hands, ensuring sustainable growth that withstands algorithm updates.

Communication is the key to a successful partnership. You will always be kept in the loop with detailed reports and regular updates on the progress of your SEO campaigns. I am readily available to address any questions or concerns, providing you with peace of mind and confidence in your investment.

Results speak louder than words, and my track record is a testament to my capabilities. Countless satisfied clients have experienced dramatic improvements in their website’s visibility, organic traffic, and conversion rates. By choosing me, you align yourself with a proven winner.

Investing in SEO is not an expense; it’s an investment in the future success of your business. With my SEO services, you can expect a substantial return on investment. As your website climbs the search engine rankings, your online presence will expand, attracting quality leads and boosting revenue.

Contact Now

We’re masters of the services we offer.

Stand out from your Competitors by hiring Ratul Roy

Our award-winning services make sure that you get the best results

Website SEO Audit

A website audit is essential to find out what technical improvements your website needs. Auditing your website can help your business attract a large audience and will eventually help you increase revenue.

Keyword Research

We have a team of experts who combine the best software with manual research to find the best and most profitable keywords for you. Specific, well-researched keywords in your content will help you attract an organic audience and increase your organic traffic in no time.

Competitor Analysis

You can only compete with your competitors when you know how they are applying their strategies. Competitor analysis is crucial for keeping pace in any business, and we are here to keep an eye on your competitors for you.

Technical SEO

Google now gives great priority to technical SEO. Before on-page and off-page work, you have to take care of technical SEO factors such as website speed, support for all devices, and site errors.

On-Page SEO

Do you want to be at the top of Google searches? Well, on-page SEO can help you with that. And we have hired the best, most experienced professionals to provide on-page SEO services for your website.

Off-page SEO

Our team is not only expert in on-page SEO; we also provide the best off-page SEO services to our clients, including link building, classified ads, and blog posting.

Why Is SEO Important?

SEO is the process of optimizing a website so that Google can understand it and rank it higher for relevant queries. It helps users find relevant results. SEO work should target both users and Google.

Through the power of SEO, you can gain real, organic leads, customers, and traffic, as well as brand awareness. That is why you need a skilled SEO expert.

In the modern era of the internet, if you want to grow your business online, SEO (Search Engine Optimization) is a must. On average, around 53% of website traffic comes from organic search. So, if you rank your business on Google with white hat SEO, you can get the most customers, traffic, and leads from search engines. SEO also builds relationships with your audience, makes your brand more popular, and reduces your marketing costs.

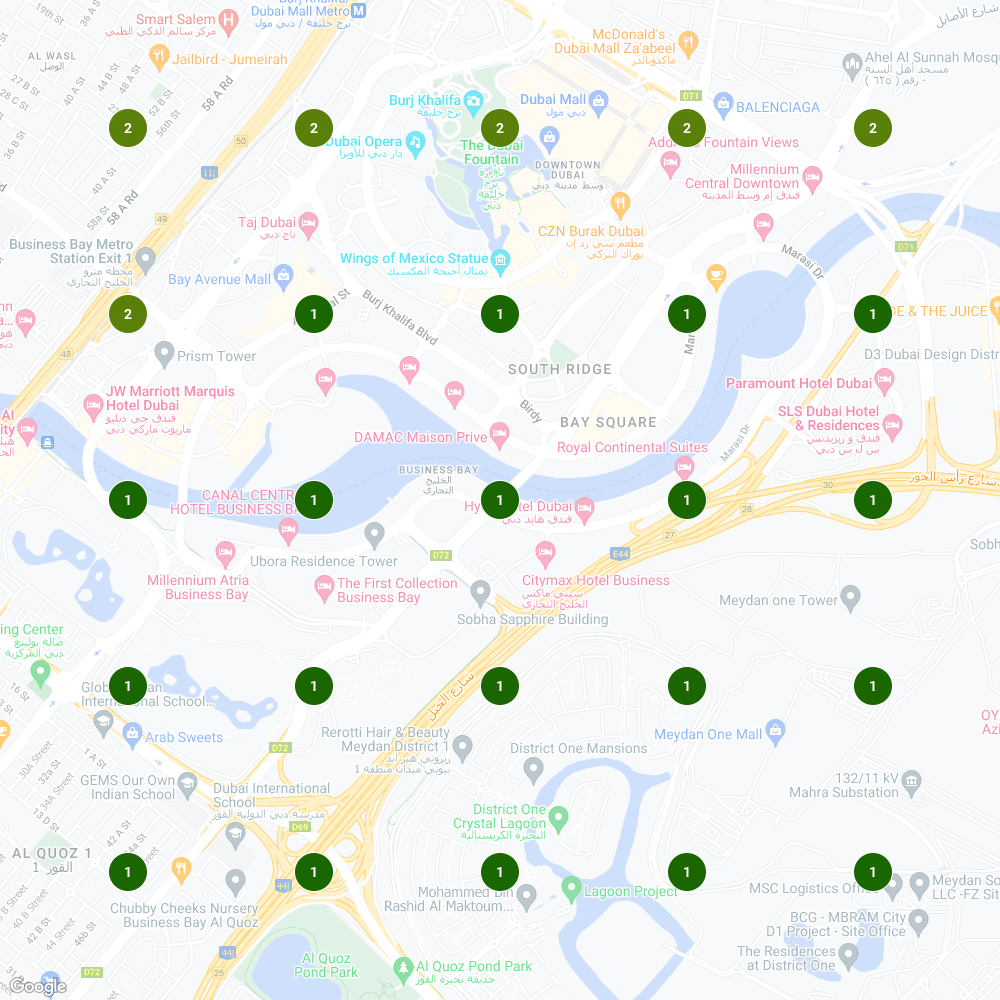

Local SEO Expert in Bangladesh

Ratul Roy is a local SEO expert in Bangladesh with many years of experience providing local SEO services.

I work with local businesses strategically, helping them rank in the local pack as well as in organic local results.

We also bring relevant, targeted traffic from local listings on different search engines. Local SEO is a powerful way to acquire new clients and leads and grow your business locally. In internet marketing, it is crucial to get your business listed on Google My Business and Google Maps with complete details, so that when people search for the service or product you offer using local search terms like "painters in Hyderabad", "plumbers in Delhi", or "locksmiths in Mumbai", they can find you easily based on their location.

Ratul Roy provides local SEO services for local businesses, agencies, small businesses, retailers, and websites. We have been helping our customers rank higher online since our founding day. Our team has successfully helped many local businesses build their client base through local SEO and local marketing. Our local SEO services include local optimization, local search engine submission, local business information validation, and online reputation management of your business on prominent review sites like Yellow Pages, Google+ Local, Yelp, and CitySquares.

We are one of the fastest-growing local SEO agencies in Bangladesh, with 5-star ratings from our clients! Get in touch to get listed or get ranked.

We can also rank you on Google Maps for keywords related to your business. Find more info about our competitive local SEO packages here: Competitive Local SEO Packages

If you want to improve local visibility and bring foot traffic from nearby towns and cities to a specific location or storefront, local SEO is a must.

Local SEO Service

Who Is the Youngest SEO Expert in Bangladesh?

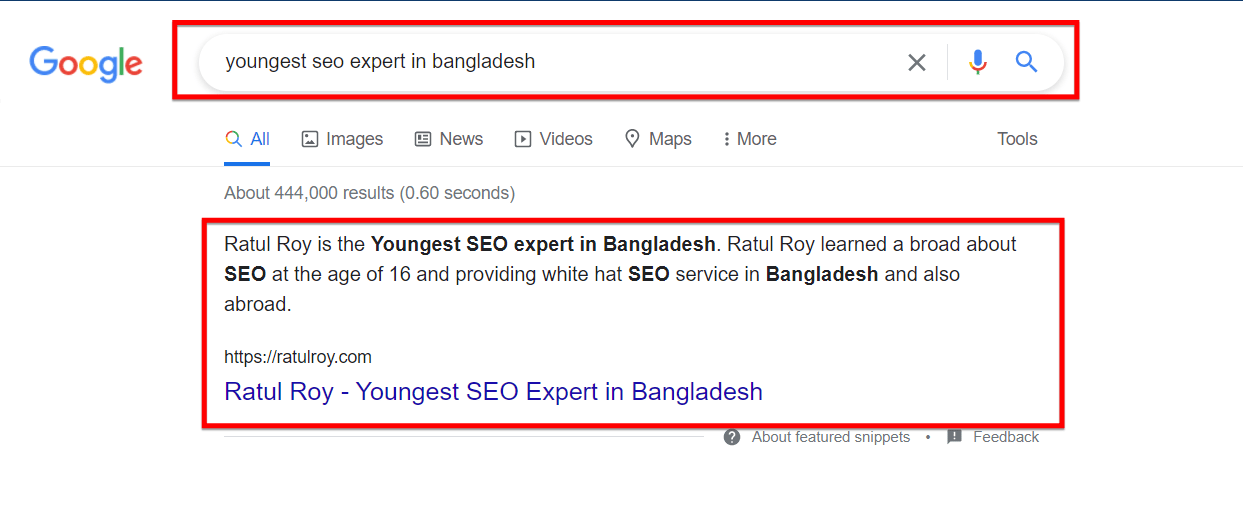

Ratul Roy is the youngest SEO expert in Bangladesh: to date, I have not come across any genuine search engine optimization expert younger than me. I started providing SEO services at the age of 16, did well in the marketplace as an SEO freelancer, and then began working outside the marketplace. I have ranked many websites on Google with my SEO strategies and experience. I also have expertise in SMM, SEM, and CRO (Conversion Rate Optimization).

To learn more, just search for the term and see the results yourself.

Unleashing the Power of Semantic Keywords: A Comprehensive Guide.

A semantic keyword is a term that shares a contextual...

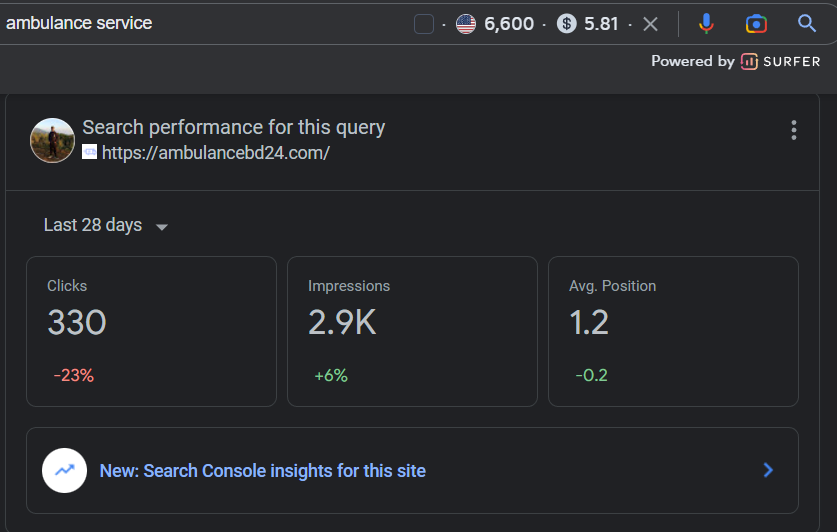

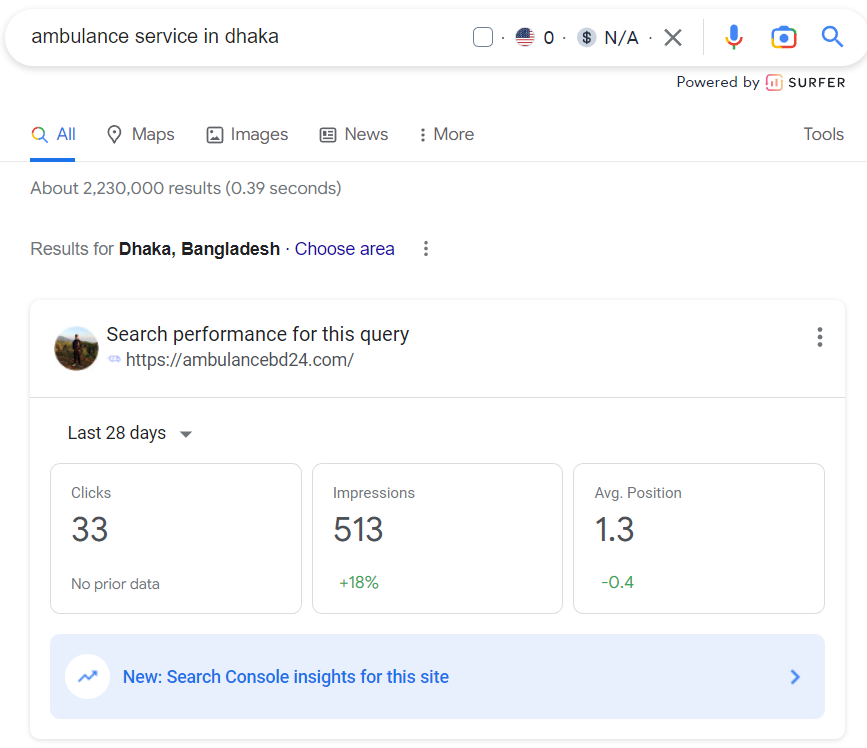

How Our SEO Strategies Helped Ambulance BD 24 Rank for Key Service Keywords

Ambulance BD 24 is a leading ambulance service provider in...

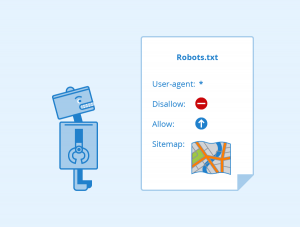

Why robots.txt in SEO? How does that work?

Robots.txt is a file which you can use to...

Contact

Let's Make a Deal

Contact Me

For any questions or queries, please send a message using the form below, or message me directly on social media.

You will find my social media links in the footer section.

Professional & Affordable SEO Services Pricing

Let's build your own place on Google with a reasonably priced SEO package

Silver

Good for small business or website

- In-depth Website Audit

- Keyword Research & Listing

- Minimum 5 seed keywords

- Total 30+ Keywords

- Maximum 10 Pages Website

- Competitor Analysis

- Strategy Building

- Google Analytics, Webmaster Setup

- On Page SEO

- In-depth Technical SEO

- Content audit & Development

- White Hat Powerful Backlinks

- Monthly Clear Report

- Weekly Update and 30-Minute Session

Gold

Good for blog or medium business

- In-Depth Website Audit

- Keyword Research & Listing

- 10 Seed Keywords

- Total 60+ Keywords

- Maximum 20 Pages Website

- Competitor Analysis

- Work Plan Development

- GSC, GA Setup

- On Page SEO

- Technical SEO

- Website Redesign Guidance

- Content Audit & development

- Google My Business Optimization

- Social Media Setup

- Powerful Link Building

- Monthly Transparent Report

- Weekly 30 Minutes Session

- Blog Posts Writing (5 Posts)

Diamond

Good for blog, corporate business

- In-depth Website Audit

- Minimum 15 Seed Keywords

- Total 100+ Keywords

- Maximum 30 Pages

- Competitor Analysis

- Strategy Development & execution

- GSC, GA Setup

- On Page SEO

- Technical SEO

- Website Redesign

- Content Audit & Development

- GMB setup and Optimization

- Social Media Setup

- Blog Post Writing (10 Posts)

- Backlinks Audit and analysis

- Link Building Campaign Development

- Report in Dedicated Dashboard

- Weekly 30 Minutes Session

How to Find the Best SEO Service Provider

Finding an experienced SEO expert in Bangladesh can be challenging, but with the right guidance you can be confident of getting the results you want. Below are some tips to help you find the best one, along with some of the things a skilled SEO expert in Bangladesh can do to deliver results for any business.

An SEO expert in Bangladesh can help you determine your target keywords. The first step in this process is to come up with a list of keywords that best describe your website. Tracking these keywords will also help you better understand which pages of your website are getting the most traffic. Your SEO expert can also help you get started with link building and navigate the Google Analytics platform. Analytics data is not always easy to interpret, but an SEO expert in Bangladesh can help you understand the tool.

In addition to keyword research, an SEO expert in Bangladesh will examine competitors in your field and analyze their traffic, user intent, and website structure. A thorough competitor analysis will show you where to find SEO and keyword opportunities and improve your presence on the Internet. Hiring an SEO expert in Bangladesh to optimize your site is worth the investment, because a top ranking will help your business grow. There are several important factors that an SEO expert in Bangladesh must consider when working with a client.

⚠️ Be Careful When Hiring an SEO Expert ⚠️

Sadly, many search engine optimization experts, not only in Bangladesh but also abroad, are not up to date. Some think SEO just means link building, and some apply black hat SEO techniques. With black hat SEO, you can rank for a short period, but then you have to wait for a penalty. Those who think SEO just means link building build backlinks without any limits or rules, which is a kind of spam. They apply outdated, traditional SEO methods. Google is smarter than before, so you have to follow white hat SEO methods. Whatever you gain the black hat way, you will lose more later. So, be careful when you choose an SEO expert for your business or website.

Choosing the right SEO expert for your business is important. A professional SEO expert can provide you with the best strategies to improve your website’s search engine rankings. He or she can also help you optimize your site for local customers. If you want your business to succeed in the digital market, an SEO expert will be an invaluable asset. If you’re an online business owner, you should already be developing a solid white hat SEO strategy.

The best SEO expert can improve your website’s position in SERPs (search engine results pages) by improving the content of your website. He or she can also make your website easy to navigate and suitable for different types of customers. An SEO expert can help you reach your target audience and increase your sales. So, let’s look at what an SEO expert in Bangladesh does and the benefits of hiring one.

A good SEO expert can improve traffic, generate more leads, and help your business grow. A good SEO expert can help your website become more visible and successful by analyzing your website’s competitors. He or she can identify opportunities for improvement and work with the latest Google updates to boost your site’s ranking. And you can trust your SEO expert in Bangladesh to help you with your website. He or she can also provide you with training and guidance to help your business succeed.

An SEO expert is essential to your business’ success. A good SEO expert will be able to guide you through the process and give you a strategy that will help you grow. The process is complicated, but a good expert will make it easy to understand and will be able to answer any questions you might have. If you’re looking for an SEO expert in Bangladesh, you’ll benefit from their knowledge and experience.

An SEO expert is an SEO consultant who has a variety of skills. He or she should have experience in the field, as this is an integral part of a website’s success. It is also important to look for a quality SEO company. A good SEO team will not only help you rank on Google but will also help you attract more customers. A good search engine optimization company will be able to help you increase your revenue and improve your organic search rankings.

FAQ

Most frequent questions and answers

Obviously, Ratul Roy is the Youngest White Hat SEO Expert in Bangladesh. Since his school days, he has been building his skills and providing SEO services as an energetic, young, and experienced SEO specialist.

Yes, Ratul Roy is an SEO Expert in Bangladesh. He is also known as the Youngest SEO Expert in Bangladesh.

In my SEO service you will get:

- Website Audit

- Competitor Analysis

- Keyword Research

- Technical SEO

- On-page SEO

- Off-page SEO

- Monitoring and Tracking

- Branding

- Social Media Marketing (Optional)

To be honest, there is no fixed price for hiring me. It varies depending on your domain’s condition and your industry/niche.

White hat SEO always takes time. I don’t provide any SEO service that shows results overnight. Organic SEO usually takes 2 to 3 months to show results, and a new domain can need 6 months even with a proper SEO strategy.

I always work to grow businesses online. I want to provide value that improves the condition of your business, and I aim to deliver a guaranteed ROI (Return on Investment).

Ratul Roy is the best local SEO expert in Bangladesh. You don’t need to trust me; trust Google.